(Mis)adventures in retrocomputing, or how I killed and resurrected my Amiga 1000 in one evening.

I'm putting this out there into the world, just in case someone else manages to kill their Amiga in this way.

I've been messing around with an Amiga 1000 (the same one from my early childhood!) and lately have been experimenting with a Rejuvenator and a Parciero and some apparent incompatibilities thereof.

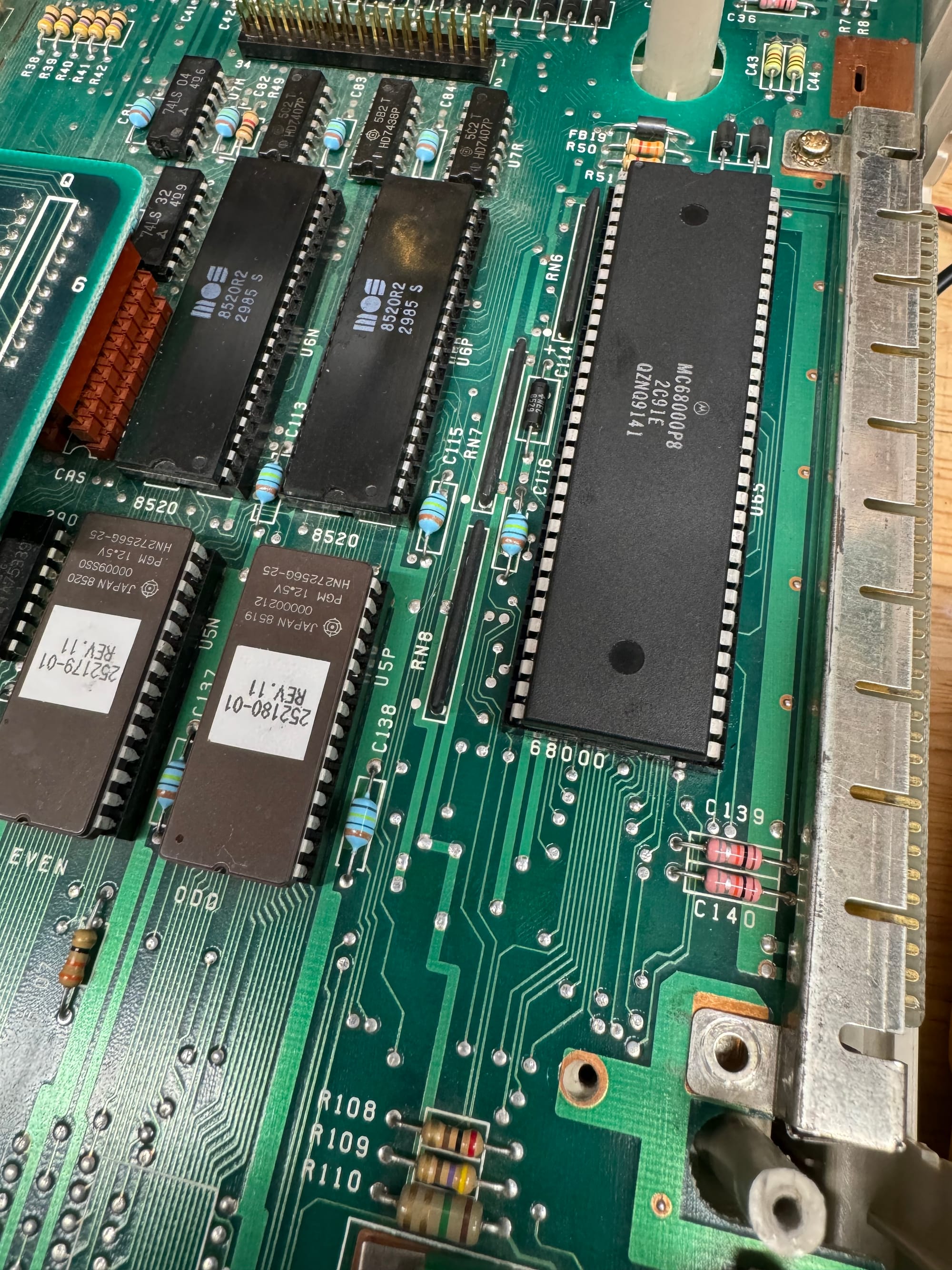

Anyway, as a troubleshooting step, I decied to try swapping out the 68000 CPU for another copy I had lying around, just to see if changing the CPU resolved the issue I was having.

Immediately after swapping the CPU, the Amiga would not boot. Black screen, no attempt to load from the floppy, and the power LED lit up but did not flash.

Weird, I thought, I'll just swap the CPU back.

Well, now neither CPU would boot, with the same symptoms.

I got out the oscilliscope. All the voltages looked correct, and there was a 7.8mhz clock signal on CLK. RST measured 0v, and HLT measured 3.3V (logic high).

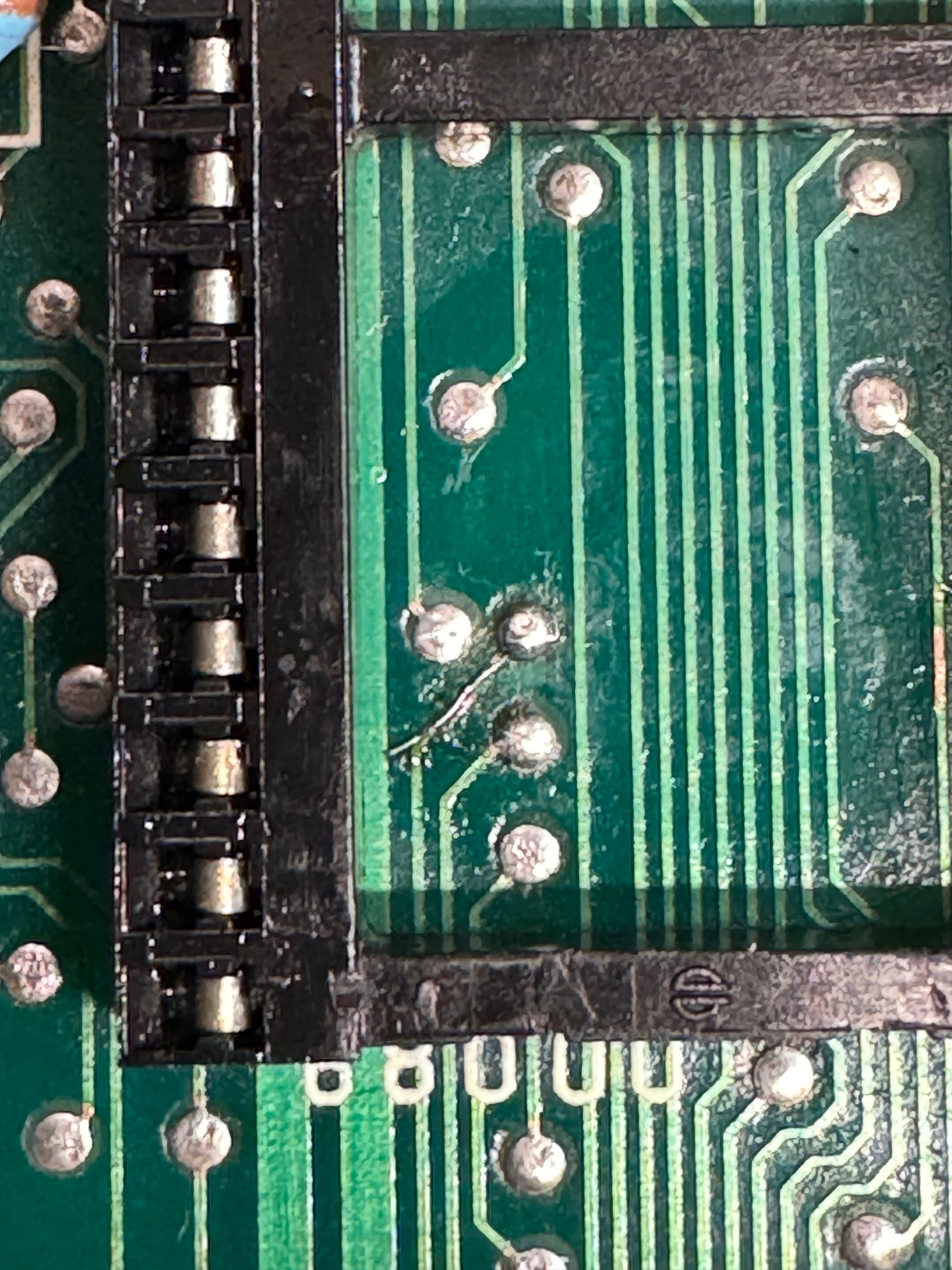

After much troubleshooting, including checking all the pins of the CPU with a multimeter, I discovered the problem: The little screwedriver I used to lever up the CPU out of the socket actually cut a trace on the PCB underneath the CPU. Of course the CPU was hiding the problem when the CPU was in place. Here's a photo of my repair job:

To fix the cut trace, I scraped a bit of the soldermask off with an exacto knife and soldered a tiny wire across the broken part.

After fixing that trace, the Amiga booted right up!

Here are some schematics, preserved here in case someone finds them helpful.

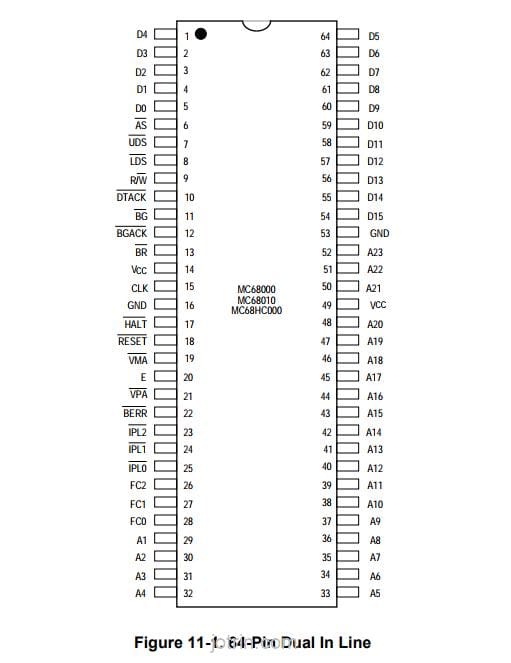

And the CPU pinout: